Project 4: Virtual Reality Model

The SHIPS Project in conjunction with Birmingham University HITT is developing a number of Virtual Reality (VR) models of shipwreck sites and now has a VR model of the A7 submarine. A VR model of the wreck site has also been created so more people can experience what it is like to visit the submarine as she is today on the seabed.

This allows the public to ‘access’ the site remotely through VR, in effect turning the site into a virtual museum of the submarine’s exterior without any physical access being involved. This virtual dive concept has already been demonstrated to excellent effect in subsea training projects sponsored by the MoD and in a project entitled the Virtual Scylla, conducted in collaboration with the National Marine Aquarium in Plymouth.

The Virtual A7 project is being undertaken by the Human Interface Technologies Team based at the University of Birmingham. The three-dimensional (3D) computer tools and design procedures for the Virtual A7 construction process are based on those adopted for a number of previous projects, such as SubSafe. SubSafe was undertaken for the MoD and used Virtual Reality technologies and techniques to help new recruits become familiar with the deck and compartment layouts, and the location of safety-critical equipment, onboard Trafalgar Class submarines.

The baseline 3D model of the A7 was developed using Autodesk’s 3ds Max (formerly 3D Studio Max). 3ds Max is a popular professional 3D modelling package used across the globe for developing content for high-fidelity computer-generated imagery. It is used for film and TV animation productions and is also widely exploited in the development of 3D models and scenes for Virtual Reality and computer games.

Virtual Reality model of A7 as she was in 1914

In addition to its modelling and animation tools, recent editions of 3ds Max also support advanced shading, which is used for realistic lighting, shadowing and special effects, and particle systems, which are an important development for the rendering (‘drawing’) of underwater scenes. The tool benefits from strong international community support in the form of commercial plugins supporting a wide range of real-time rendering effects.

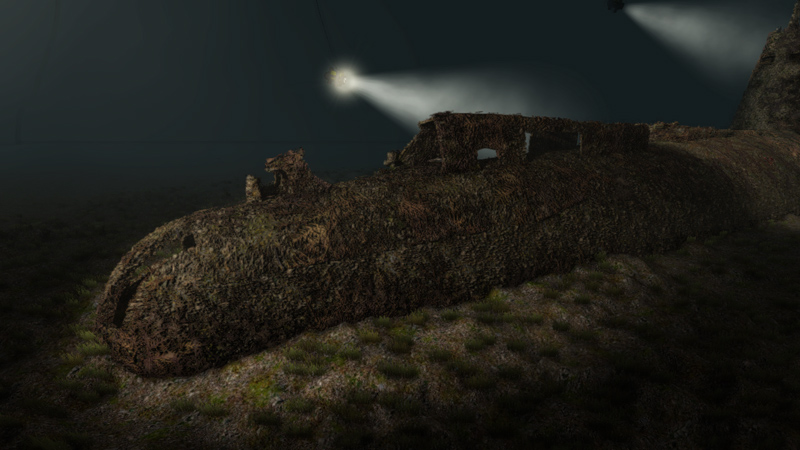

Video clip showing the VR model of the A7 submarine on the seabed.

The baseline Virtual A7 model was constructed using plans and photographs collated from a variety of sources, including the SHIPS Project database, books, the Royal Navy Submarine Museum, online resources and even postcards sourced from eBay. The hull surface and component material effects for the completed model were based on information obtained from various subject matter experts and from the design of appropriate texture effects using such desktop imaging packages as Photoshop and Paint.net.

VR model of the A7 wreck site with the submarine partly buried in the seabed

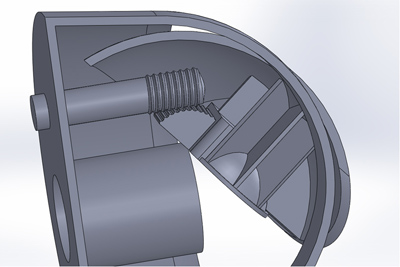

One particular region of the 3D model demanded additional attention, and that was the outer shutter mechanism of the torpedo tubes, the motion path for which could not be readily ascertained from images of the A Class submarines when out of water, trimmed up alongside depot ships, or when beached. To help with visualising this and, subsequently, developing a realistic bow door animation in 3ds Max and the chosen game engine (see below), an appropriate 3D mechanism (Fig. 78) was developed and tested using the Solidworks solid modelling computer-aided design system developed by Dassault Systèmes. This enabled a reasonable approximation of a door-opening animation to be developed.

Solidworks Model of A7 Bow Door Mechanism

To enable the users of the Virtual A7 program to explore the submarine and the wreck site in real time, all 3D and associated 2D assets (including textures used to endow the models with acceptable levels of visual detail) had to be imported into a game engine. In brief, a game engine is an integrated core of software modules that allows the contents of a computer game or simulation (2D/3D objects, images or textures, embedded videos, etc.) to be rendered and interacted with by end users in real time, using a range of input (controller) and output (display) technologies. A typical game engine will also handle such features as 3D sound, object and environment physics (such as lighting, collisions and particle effects), animation, networking between multiple users, artificial intelligence, scripted behaviours of objects, including virtual humans or ‘avatars’, and surface/subsea terrain databases.

The engine used for the Virtual A7 project is Unity, a cross-platform game engine. As well as featuring its own plug-in Web Player for Windows and Apple operating systems, the product supports development for such operating systems and platforms as Apple’s iOS (with support for the iPod, iPhone and iPad), Android, Windows, BlackBerry 10, Linux, PlayStation, Xbox and Nintendo’s Wii. The choice of Unity as the game engine for this particular project was based on such factors as:

- The experience of the development team in using Unity effectively for other VR/games-based simulation projects, including those conducted for Dstl/MoD;

- Unity’s user-friendly and powerful multi-window editing toolkit; a highly visual asset, helping to simplify game or simulation development workflow;

- A flexible import pipeline allowing for relatively straightforward import of 3D models, either custom-built (using tools such as 3ds Max, Maya, Blender, SketchUp, etc.) or from popular online 3D model resource sites, such as Turbosquid, 3D Cafe, and so on;

- The availability of a wide range of low-cost, sometimes free, special-purpose tools and plugins from a dedicated online asset store, together with a very active Unity Forum community;

- The fact that games and simulation run-times developed using Unity can be distributed licence-free, without the need for additional large software downloads or dedicated dongles;

- Support for a range of human interface devices, from head-mounted displays, such as the Oculus Rift and Samsung Gear VR, to popular input devices, such as the Xbox controller or gamepad, Kinect, Leap Motion and many others;

- Use of the tool by other, international navy (and submarine) communities, including the Royal Canadian Navy (e.g. for onboard awareness training of the Victoria class of submarine) and the Royal Australian Navy (for the Collins class).

Virtual reality model of the A7 Submarine as she is today, based on the hull survey completed by the A7 Project

Over time, rust, debris, sediment and organic matter have transformed the A7’s hull and fin from a once smooth surface into, in effect, a randomly undulating rusty shell. To simulate this level of decay visually and convincingly, a range of 3D graphics techniques had to be employed. One such technique is ‘bump-mapping’, a method by which additional lighting information is applied to a texture, thus creating the illusion of greater depth and undulations on the surface of the submarine. To further increase the close-up detail of the A7 site, its exterior hull has been augmented with 3D crustaceans, plant life and rocks located randomly over the surface. It was also necessary to randomise the rotational position and scale of these scattered surface objects in order to avoid the visual distraction of repeating patterns.
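The random scattering described above can be illustrated with a short sketch. It is written in Python purely for illustration and is not the project's own code; the `scatter` function, the `Placement` record, the list of surface sample points and the scale range are all hypothetical. Each scattered object is given its own random rotation and scale so that copies of the same rock or crustacean model do not form an obvious repeating pattern.

```python
import random
from dataclasses import dataclass


@dataclass
class Placement:
    """One scattered surface object, e.g. a rock or a crustacean (hypothetical record)."""
    position: tuple       # (x, y, z) point on the hull surface
    yaw_degrees: float    # rotation about the local surface normal
    scale: float          # uniform scale factor


def scatter(surface_points, count, scale_range=(0.6, 1.4), seed=None):
    """Choose `count` surface points and give each a random rotation and scale.

    `surface_points` is an assumed list of (x, y, z) samples on the hull.
    `scale_range` is an illustrative choice, not a value from the project.
    """
    rng = random.Random(seed)  # a fixed seed makes the layout repeatable
    placements = []
    for _ in range(count):
        placements.append(
            Placement(
                position=rng.choice(surface_points),
                yaw_degrees=rng.uniform(0.0, 360.0),
                scale=rng.uniform(*scale_range),
            )
        )
    return placements
```

Passing a fixed `seed` reproduces the same layout on every run, which is one way to keep a scene identical between sessions while still looking irregular.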

The dense particulate matter typically seen in underwater environments is recreated in the A7 wreck scenario by using 500 semi-transparent ‘billboard textures’ (i.e. flat images that always face towards the camera viewpoint). Each individual texture is an image comprising around 100 individual particles. This gives a visual impression of some 50,000 floating dust particles, whilst the simulation only has to calculate the position of 500. In addition, as with many subsea simulations, simple fogging is used to create the illusion of the underwater environment. Objects appear to fade into the distance as the light is attenuated through the dense water. This simple effect was enhanced by placing 3D cones emanating from each light source and endowing those cones with a semi-transparent animated texture. This gives the appearance that the light sources are illuminating the microscopic dust particles within the water volume.
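The particle and fog effects described above can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the Unity implementation used in the project. The billboard rotation is simplified to a turn about the vertical axis only, and the fog uses an exponential fall-off with an arbitrary `density` value, whereas the text says only that "simple fogging" was used. The figures of 500 billboards and 100 particles per texture come from the text; every function name here is hypothetical.

```python
import math

BILLBOARD_COUNT = 500         # from the text
PARTICLES_PER_TEXTURE = 100   # from the text ("around 100")


def apparent_particle_count(billboards=BILLBOARD_COUNT,
                            per_texture=PARTICLES_PER_TEXTURE):
    """Particles the viewer appears to see; only `billboards` positions are simulated."""
    return billboards * per_texture


def billboard_yaw(billboard_pos, camera_pos):
    """Yaw in radians about the vertical (y) axis that turns a flat quad,
    whose front faces +z at zero yaw, towards the camera."""
    dx = camera_pos[0] - billboard_pos[0]
    dz = camera_pos[2] - billboard_pos[2]
    return math.atan2(dx, dz)


def fog_factor(distance, density=0.05):
    """Fraction of an object's own colour still visible at `distance`
    (exponential fog; `density` is an assumed, illustrative value)."""
    return math.exp(-density * distance)


def apply_fog(colour, fog_colour, distance, density=0.05):
    """Blend an RGB colour towards the fog (water) colour with distance."""
    f = fog_factor(distance, density)
    return tuple(f * c + (1.0 - f) * g for c, g in zip(colour, fog_colour))
```

With the values from the text, `apparent_particle_count()` returns 50,000 while only 500 positions are updated per frame, which is the saving the paragraph describes.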

The virtual camera, which simulates the underwater viewpoint of the end user, has been enhanced with several visual effects. Firstly, a ‘fish eye’ lens distortion effect was added to simulate the curved plastic dome that typically covers underwater cameras, leading to a slight distortion of images. Secondly, a ‘depth of field’ effect was used: by blurring objects that are very close to or very far from the camera, it was possible to simulate the focusing limitations of underwater cameras. Finally, a dynamic brightness effect was used to simulate how real-world underwater cameras attempt to adjust to limited amounts of underwater lighting. Within the Unity runtime demonstration of the wreck site, the user can optionally remove the sea life covering the hull, together with the seabed, thus revealing the original 3D model.
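Two of these camera effects can be approximated with very small formulas, sketched below in Python for illustration only. The one-coefficient radial model, the exposure smoothing rule and all parameter values (`k`, `target`, `rate`) are assumptions rather than details from the project, which would have relied on the game engine's own image effects.

```python
def radial_distort(x, y, k=0.2):
    """Push a normalised image coordinate (origin at the image centre)
    outwards or inwards by an amount that grows with distance from the centre.

    Whether the picture ends up looking barrel-like (fish eye) or pinched
    depends on the sign of `k` and on whether the mapping is applied to
    source or destination pixels; `k` here is an arbitrary example value.
    """
    r2 = x * x + y * y
    factor = 1.0 + k * r2
    return x * factor, y * factor


def adapt_exposure(current, scene_luminance, target=0.5, rate=0.1):
    """Move the exposure a fraction `rate` of the way towards the value that
    would bring the average scene luminance to `target`."""
    desired = target / max(scene_luminance, 1e-6)  # guard against division by zero
    return current + rate * (desired - current)
```

Calling `adapt_exposure` once per frame makes the image brighten gradually when the camera moves into a darker area, rather than jumping, which is the behaviour a dynamic brightness effect aims to imitate.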

The VR model of the site showing the submarine partly buried in the seabed was created before the diving fieldwork started. This model was used to plan diving operations and the divers’ tasks on the site, and to train the divers in what to expect in the dark, low-visibility conditions there.